About Me

I am a Principal Research Scientist at Nokia Bell Labs in Cambridge, UK, where I lead the Device Systems team.

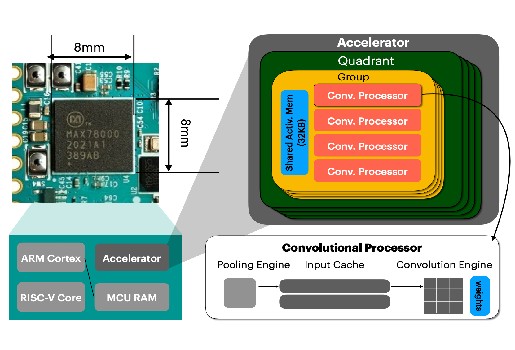

My team builds multi-device systems that support collaborative and interactive services. With the unprecedented rise of on-body and near-body devices, it is now common to find ourselves surrounded by multiple sensory devices. We explore the system challenges of enabling multi-device, multi-modal, and multi-sensory functionality on these devices, which opens up exciting opportunities for accurate, robust, and seamless edge intelligence.

My research interests include mobile and embedded systems, edge intelligence, tiny ML, the Internet of Things (IoT), and social and cultural computing. I enjoy building real, working systems and applications, and I like to collaborate with experts from other domains on interdisciplinary research.